I am currently a postdoctoral researcher at The Hong Kong Polytechnic University (PolyU). I received my Ph.D. from Nanyang Technological University (NTU), Singapore.

I work at the intersection of reinforcement learning (RL) and autonomous electrified vehicles. Methodologically, my research aims to harness human intelligence to reduce the training cost and improve the performance of machine learning algorithms. Specific research topics include human guidance-based reinforcement learning algorithms, behavior planning for autonomous vehicles, and data-driven energy management of electrified vehicles. I have published more than 30 papers in top journals and conferences.

🔥 News

- 2023.12: 🎉🎉 I was elected a PolyU Distinguished Postdoctoral Fellow at The Hong Kong Polytechnic University.

- 2023.11: 🎉🎉 Our paper on confidence-aware reinforcement learning for electrified vehicles has been accepted by Renewable and Sustainable Energy Reviews (RSER)!

- 2023.10: 🎉🎉 Our paper on brain-inspired reinforcement learning for safe autonomous driving has been accepted by IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI)!

- 2023.09: 🎉🎉 We won the Best Paper Runner-up Award at ITSC 2023!

- 2023.09: 🎉🎉 Our paper on efficient reinforcement learning through preference-guided stochastic exploration has been accepted by IEEE Transactions on Neural Networks and Learning Systems (TNNLS)!

- 2023.08: 🎉🎉 Our team won 2nd place in Track 1 of the IEEE ITSS Student Competition in Pedestrian Behavior Prediction! We will give a talk at the IEEE ITSC 2023 Workshop.

- 2023.07: 🎉🎉 Our papers on predictive decision-making and planning have been accepted by the IEEE International Conference on Intelligent Transportation Systems (ITSC)!

- 2023.06: 🎉🎉 Our paper on human-guided reinforcement learning for autonomous navigation has been accepted by IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI)!

- 2023.06: 🎉🎉 Our paper on differentiable integrated prediction and planning has been accepted by IEEE Transactions on Neural Networks and Learning Systems (TNNLS)!

- 2023.05: 🎉🎉 Our paper on driver cognitive workload recognition has been accepted by IEEE Transactions on Industrial Electronics (TIE)!

- 2023.04: 🎉🎉 Our paper on energy-efficient behavioral planning of autonomous vehicles has been accepted by IEEE Transactions on Transportation Electrification (TTE)!

- 2023.03: 🎉🎉 Our paper on combining conditional motion prediction, inverse RL, and behavior planning has been accepted by IEEE Transactions on Intelligent Transportation Systems (TITS)!

- 2022.12: 🎉🎉 Our team won 2nd place in the Algorithm Track of the Aliyun “Future Car” Smart Scenario Innovation Challenge!

- 2022.12: 🎉🎉 Our team won the Most Innovative Award and 3rd place in both Track 1 and Track 2 of the NeurIPS Driving SMARTS Competition! Check out our presentation on predictive decision-making at the official competition site.

- 2022.07: 🎉🎉 I was certified in the 2022 Imperial-TUM-NTU Global Fellows Programme: The role of robotics in well-being and the workplace!

- 2022.06: 🎉🎉 Two papers on behavioral decision-making based on safe RL and graph RL have been accepted by ITSC 2022!

- 2022.06: 🎉🎉 Our paper on RL with prioritized human guidance replay has been accepted by IEEE Transactions on Neural Networks and Learning Systems (TNNLS)!

- 2022.05: 🎉🎉 Our paper on human-in-the-loop RL has been accepted by Engineering, the official journal of the Chinese Academy of Engineering!

- 2022.04: 🎉🎉 Our paper on RL with expert demonstrations has been accepted by IV 2022!

📝 Publications

Highlight

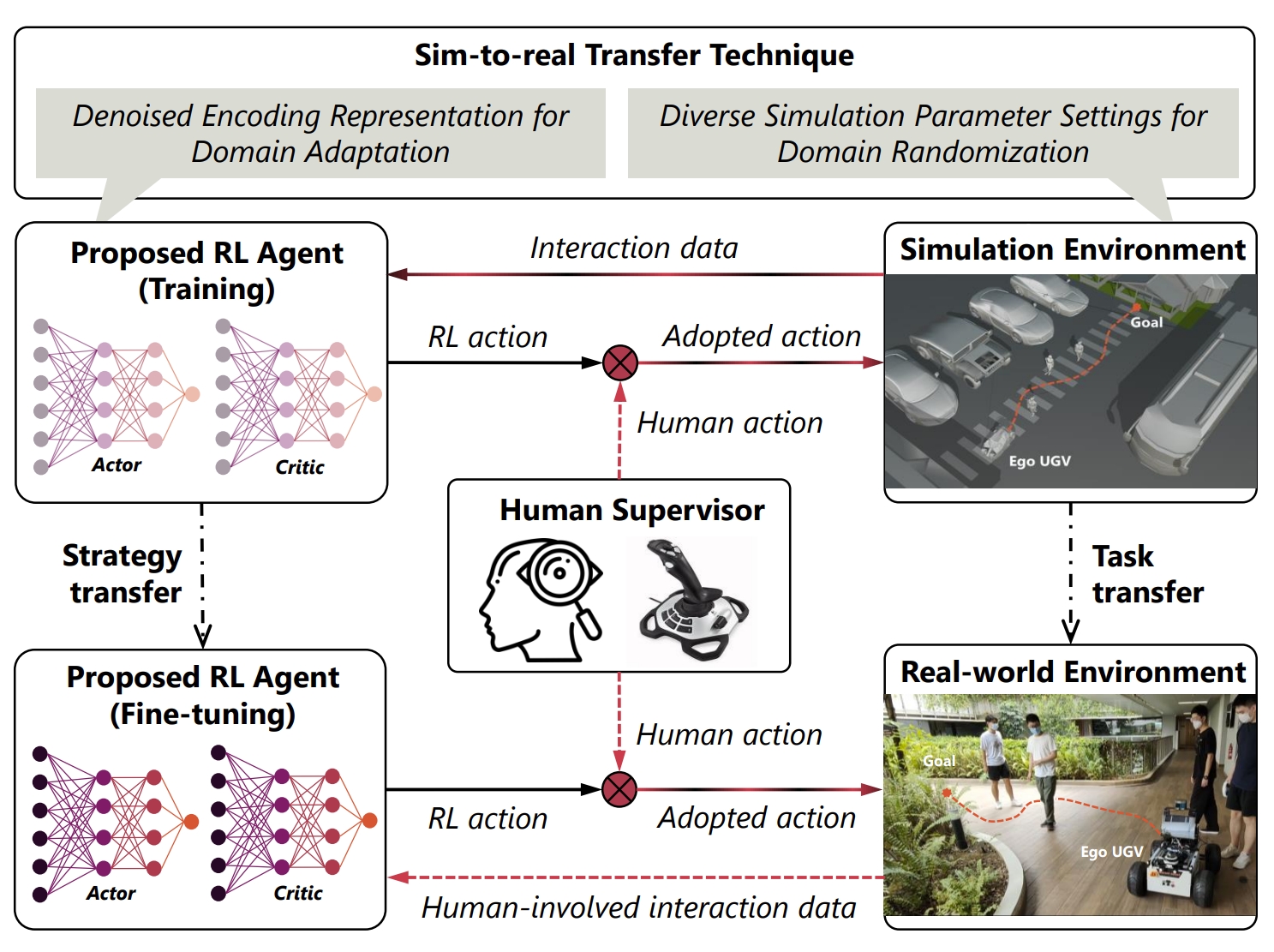

Human-guided Reinforcement Learning with Sim-to-real Transfer for Autonomous Navigation, Jingda Wu, Yanxin Zhou, Haohan Yang, Zhiyu Huang, Chen Lv

IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023 | Project

- We propose a human-guided RL framework for unmanned ground vehicles (UGVs) that incorporates a series of human guidance mechanisms to improve RL's efficiency and effectiveness in both simulation and real-world experiments. Using compact neural networks with image inputs, the framework improves goal-reaching and safety when navigating diverse environments, and it supports effective physical fine-tuning through online human guidance.

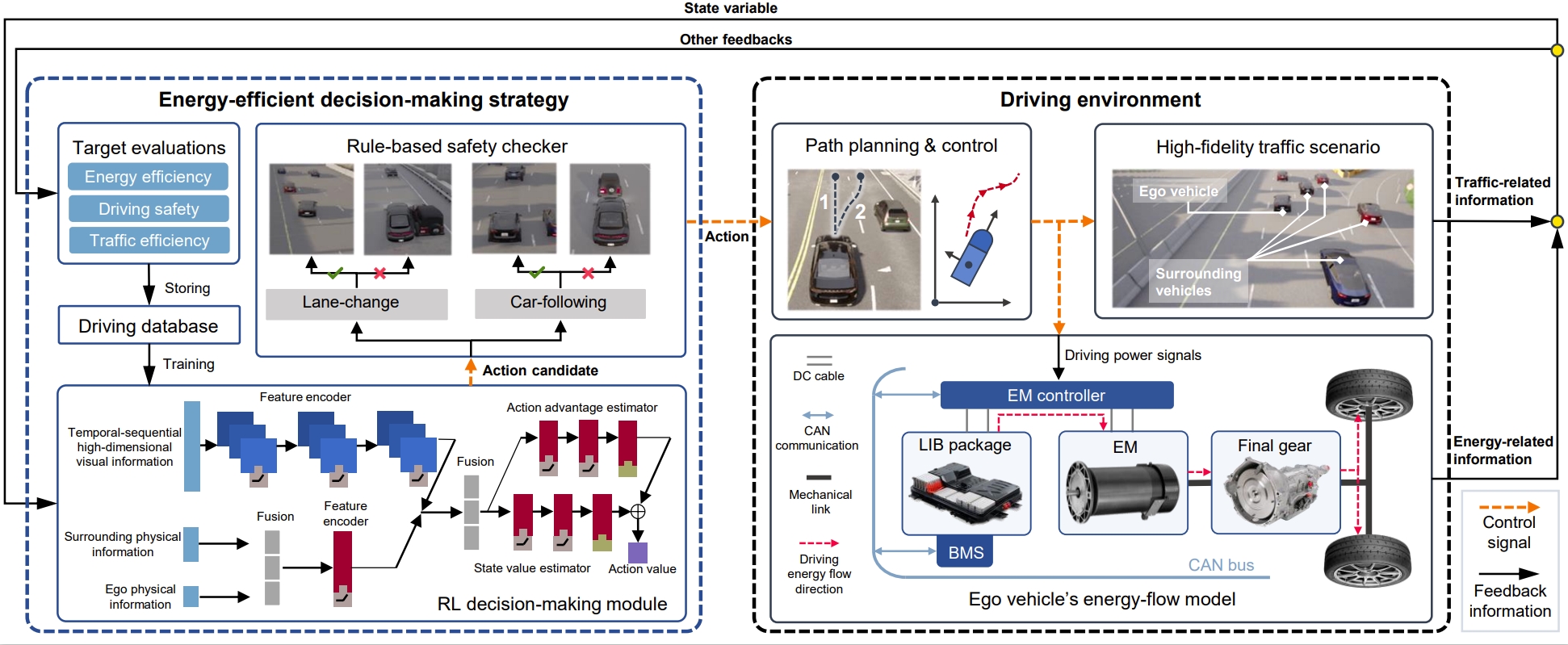

Deep Reinforcement Learning based Energy-efficient Decision-making for Autonomous Electric Vehicle in Dynamic Traffic Environments, Jingda Wu, Ziyou Song, Chen Lv

IEEE Transactions on Transportation Electrification, 2023

- We propose a deep RL strategy for autonomous EVs that takes bird's-eye-view (BEV) images as input and improves energy efficiency through smart lane-changing and car-following behaviors, with a safety checker that makes lane changes safer.

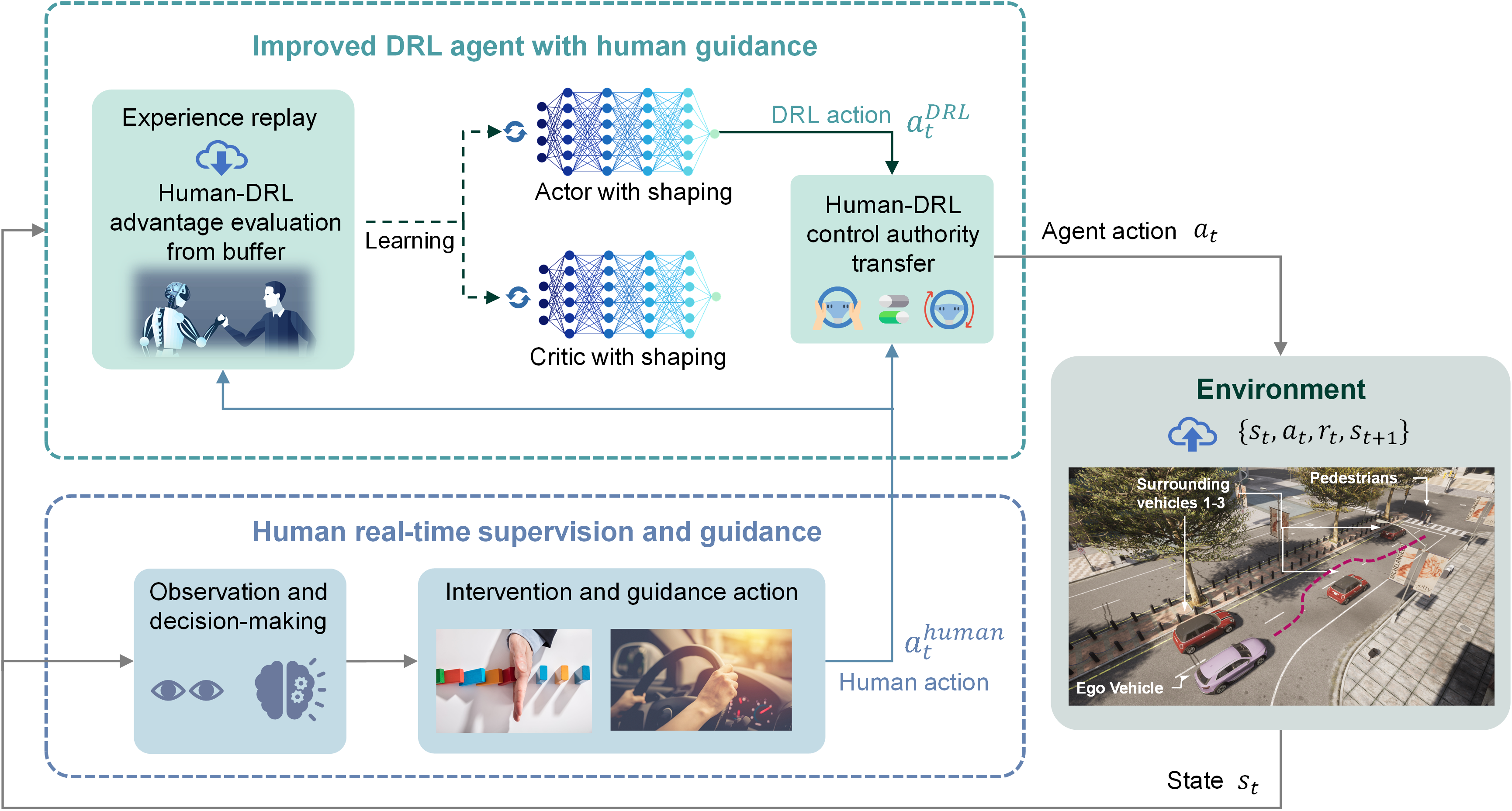

Towards Human-in-the-loop AI: Enhancing Deep Reinforcement Learning via Real-time Human Guidance for Autonomous Driving, Jingda Wu, Zhiyu Huang, Zhongxu Hu, Chen Lv

Engineering, 2022 | Project

- We propose a real-time human guidance-based deep reinforcement learning (DRL) method that improves policy training performance. A newly designed actor-critic architecture drives the DRL policy toward human guidance, and human-in-the-loop experiments with 40 subjects confirm its superior performance in end-to-end autonomous driving scenarios.

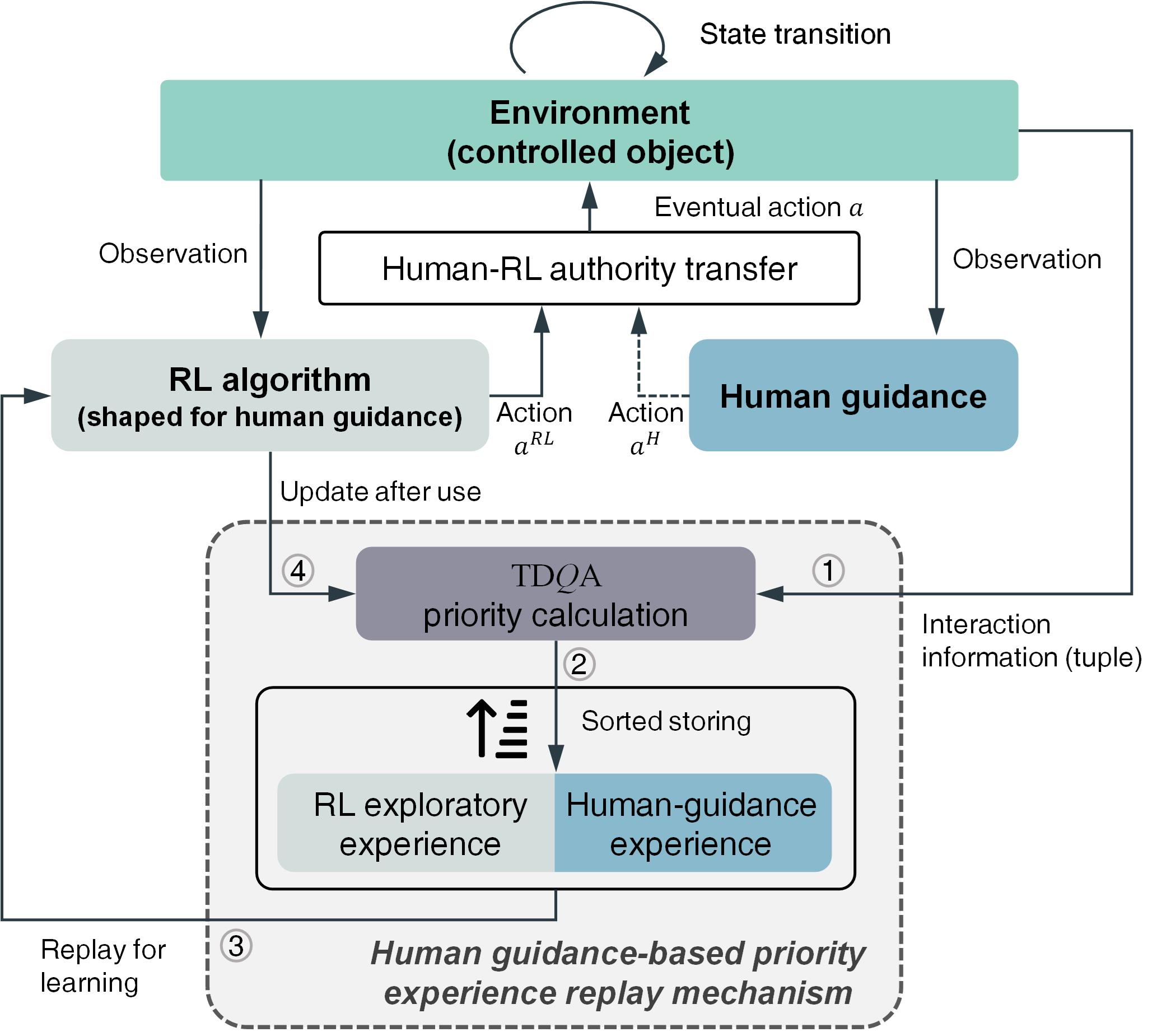

Prioritized Experience-based Reinforcement Learning with Human Guidance for Autonomous Driving, Jingda Wu, Zhiyu Huang, Wenhui Huang, Chen Lv

IEEE Transactions on Neural Networks and Learning Systems, 2022 | Project

- We propose a prioritized experience utilization method for human guidance-based off-policy reinforcement learning (RL) algorithms. An advantage-based measure highlights high-value human guidance data within a mixed experience buffer that contains both human demonstrations and RL experience.

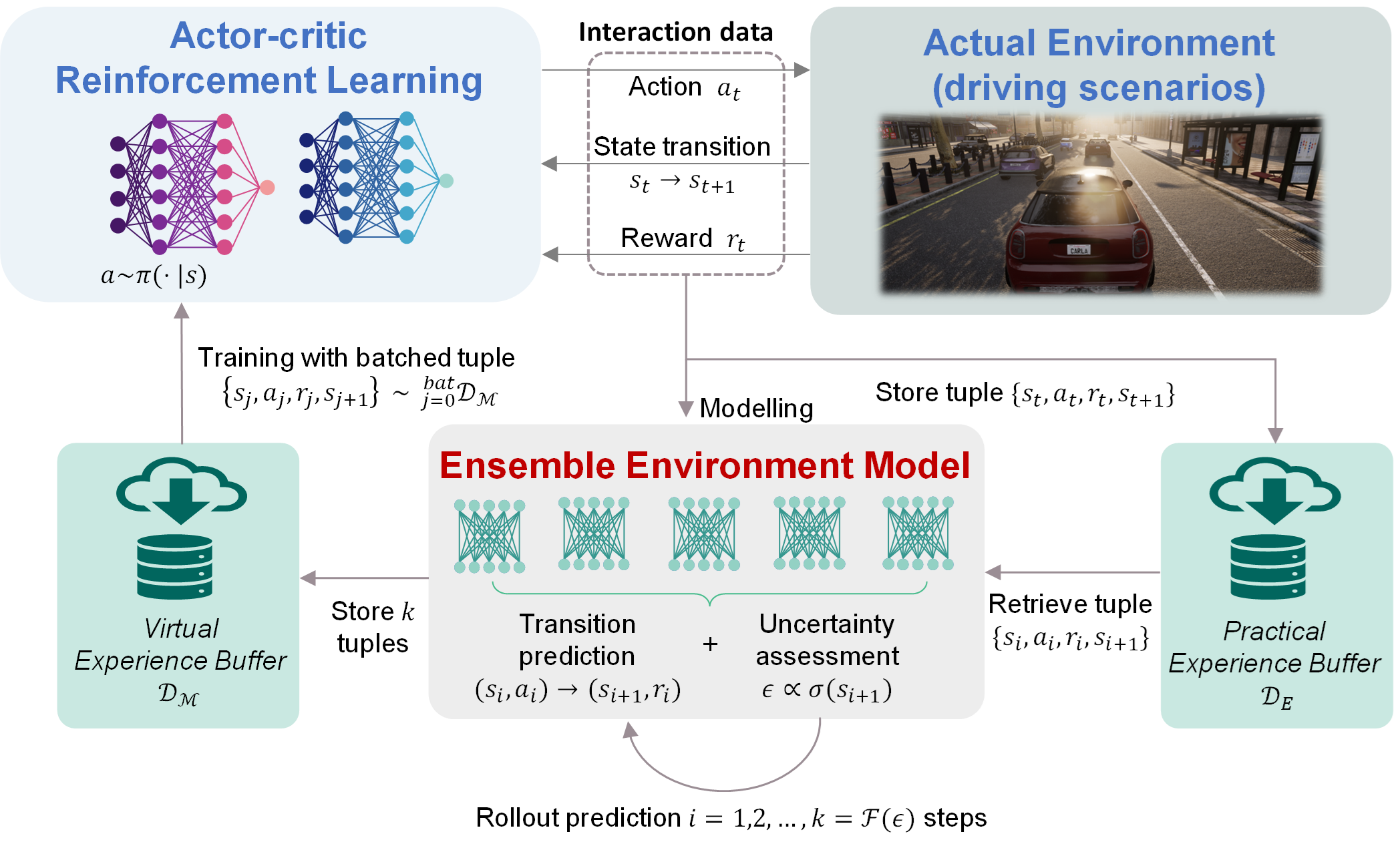

Uncertainty-Aware Model-Based Reinforcement Learning with Application to Autonomous Driving, Jingda Wu, Zhiyu Huang, Chen Lv

IEEE Transactions on Intelligent Vehicles, 2022 (ESI Highly Cited Paper)

- We propose an uncertainty-aware model-based RL method that improves performance when the learned environment model is highly uncertain. Building on an action-conditioned ensemble model, we develop an adaptive truncation approach that determines the extent to which the environment model is used, improving RL's training efficiency and performance.

- Sampling Efficient Deep Reinforcement Learning through Preference-Guided Stochastic Exploration, Wenhui Huang, Cong Zhang, Jingda Wu, Xiangkun He, Jie Zhang, Chen Lv, IEEE TNNLS, 2023

- Real-time Driver Cognitive Workload Recognition: Attention-enabled Learning with Multimodal Information Fusion, Haohan Yang, Jingda Wu, Zhongxu Hu, Chen Lv, IEEE TIE, 2023

- Differentiable Integrated Motion Prediction and Planning with Learnable Cost Function for Autonomous Driving, Zhiyu Huang, Haochen Liu, Jingda Wu, Chen Lv, IEEE TNNLS, 2023

- Conditional Predictive Behavior Planning with Inverse Reinforcement Learning for Human-like Autonomous Driving, Zhiyu Huang, Haochen Liu, Jingda Wu, Chen Lv, IEEE TITS, 2023

- Recurrent Neural Network-based Predictive Energy Management for Hybrid Energy Storage System of Electric Vehicles, Jingda Wu, Zhiyu Huang, Chen Lv, IEEE VPPC, 2022 (Invited Paper for IEEE VTS Motor Vehicles Challenge Winner)

- Safe Decision-making for Lane-change of Autonomous Vehicles via Human Demonstration-aided Reinforcement Learning, Jingda Wu, Wenhui Huang, Niels de Boer, Yanghui Mo, Xiangkun He, Chen Lv, IEEE ITSC, 2022

- Graph Convolution-Based Deep Reinforcement Learning for Multi-Agent Decision-Making in Mixed Traffic Environments, Qi Liu, Zirui Li, Xueyuan Li, Jingda Wu, Shihua Yuan, IEEE ITSC, 2022

- Improved Deep Reinforcement Learning with Expert Demonstrations for Urban Autonomous Driving, Haochen Liu, Zhiyu Huang, Jingda Wu, Chen Lv, IEEE IV, 2022

- Efficient Deep Reinforcement Learning with Imitative Expert Priors for Autonomous Driving, Zhiyu Huang, Jingda Wu, Chen Lv, IEEE TNNLS, 2022

- Driving Behavior Modeling using Naturalistic Human Driving Data with Inverse Reinforcement Learning, Zhiyu Huang, Jingda Wu, Chen Lv, IEEE TITS, 2021

- Deep Deterministic Policy Gradient-DRL Enabled Multiphysics-Constrained Fast Charging of Lithium-Ion Battery, Zhongbao Wei, Zhongyi Quan, Jingda Wu*, Yang Li, Josep Pou, Hao Zhong, IEEE TIE, 2021 (ESI Highly Cited Paper)

- Digital Twin-enabled Reinforcement Learning for End-to-end Autonomous Driving, Jingda Wu, Zhiyu Huang, Peng Hang, Chao Huang, Niels de Boer, Chen Lv, IEEE DTPI, 2021 (Outstanding Student Paper Award)

- Personalized Trajectory Planning and Control of Lane-Change Maneuvers for Autonomous Driving, Chao Huang, Hailong Huang, Peng Hang, Hongbo Gao, Jingda Wu, Zhiyu Huang, Chen Lv, IEEE TVT, 2021

- Confidence-based Reinforcement Learning for Energy Management of a Hybrid Electric Vehicle, Jingda Wu, Zhiyu Huang, Junzhi Zhang, Chen Lv, EVS34, 2021 (Excellent Paper Award)

- Battery-involved Energy Management for Hybrid Electric Bus Based on Expert-assistance Deep Deterministic Policy Gradient Algorithm, Jingda Wu, Zhongbao Wei, Kailong Liu, Zhongyi Quan, Yunwei Li, IEEE TVT, 2021 (ESI Highly Cited Paper)

- Battery Thermal- and Health-constrained Energy Management for Hybrid Electric Bus Based on Soft Actor-Critic DRL Algorithm, Jingda Wu, Zhongbao Wei, Weihan Li, Yu Wang, Yunwei Li, Dirk Uwe Sauer, IEEE TII, 2020 (ESI Hot Paper)

- Multi-modal Sensor Fusion-based Deep Neural Network for End-to-end Autonomous Driving with Scene Understanding, Zhiyu Huang, Chen Lv, Yang Xing, Jingda Wu, IEEE Sensors Journal, 2020

- Energy Management Based on Reinforcement Learning with Double Deep Q-learning for a Hybrid Electric Tracked Vehicle, Xuefeng Han, Hongwen He, Jingda Wu, Jiankun Peng, Yuecheng Li, Applied Energy, 2019 (ESI Highly Cited Paper)

- Continuous Reinforcement Learning of Energy Management with Deep Q Network for a Power Split Hybrid Electric Bus, Jingda Wu, Hongwen He, Jiankun Peng, Yuecheng Li, Zhanjiang Li, Applied Energy, 2018 (ESI Hot Paper)

🎖 Recent Honors and Awards

- 2023.08 2nd Place Winner at Track 1, IEEE ITSS Student Competition in Pedestrian Behavior Prediction

- 2022.12 2nd Place Winner at Algorithm Track, Aliyun “Future Car” Smart Scenario Innovation Challenge

- 2022.12 3rd Place Winner at Track 1 and Track 2, Most Innovative Award, NeurIPS Driving SMARTS Competition, NeurIPS Competition Track

- 2022.07 Participant, Imperial-TUM-NTU Global Fellows Programme

- 2022.03 Winner, IEEE VTS Motor Vehicles Challenge

- 2021.06 Excellent Student Paper Award in IEEE International Conference on Digital Twins and Parallel Intelligence (DTPI), 2021

- 2021.06 Excellent Paper Award in 34th World Electric Vehicle Symposium & Exhibition (EVS34), 2021

📖 Education

- 2019.08 - 2023.04, Doctor of Philosophy, Robotics and Intelligent Systems, Nanyang Technological University, Singapore

- 2016.09 - 2019.06, Master of Science (by Research), Mechanical Engineering, Beijing Institute of Technology, Beijing, China

- 2012.09 - 2016.06, Bachelor of Science, Vehicle Engineering, Beijing Institute of Technology, Beijing, China

💻 Internships

- 2018.05 - 2018.09, Visiting Scholar, University of Waterloo, Canada

📚 Academic Services

Guest Editor:

- Journal of Advanced Transportation, Special Issue on Interaction, Cooperation and Competition of Autonomous Vehicles

- IET Renewable Power Generation, Special Issue on Smart Energy Storage System Management for Renewable Energy Integration

Journal Reviewer:

- IEEE Transactions on Neural Networks and Learning Systems

- IEEE Transactions on Intelligent Transportation Systems

- IEEE Transactions on Intelligent Vehicles

- IEEE Transactions on Industrial Electronics

- IEEE Transactions on Industrial Informatics

- IEEE Transactions on Transportation Electrification

- IEEE Transactions on Vehicular Technology

- IEEE Transactions on Automation Science and Engineering

- IEEE Transactions on Cognitive and Developmental Systems

- IEEE Internet of Things Journal

- IEEE/CAA Journal of Automatica Sinica

- IEEE Intelligent Transportation Systems Magazine

- IEEE Vehicular Technology Magazine

- Engineering Applications of Artificial Intelligence

- Renewable and Sustainable Energy Reviews

- Applied Energy

- Proceedings of the IMechE, Part D: Journal of Automobile Engineering, etc.

Conference Reviewer:

- ICRA’23, IROS’23, ITSC’23, IV’23, IV’22, ITSC’22, etc.